(We needed this to derive the conditional distribution of a multivariate Gaussian.)

Consider a matrix product

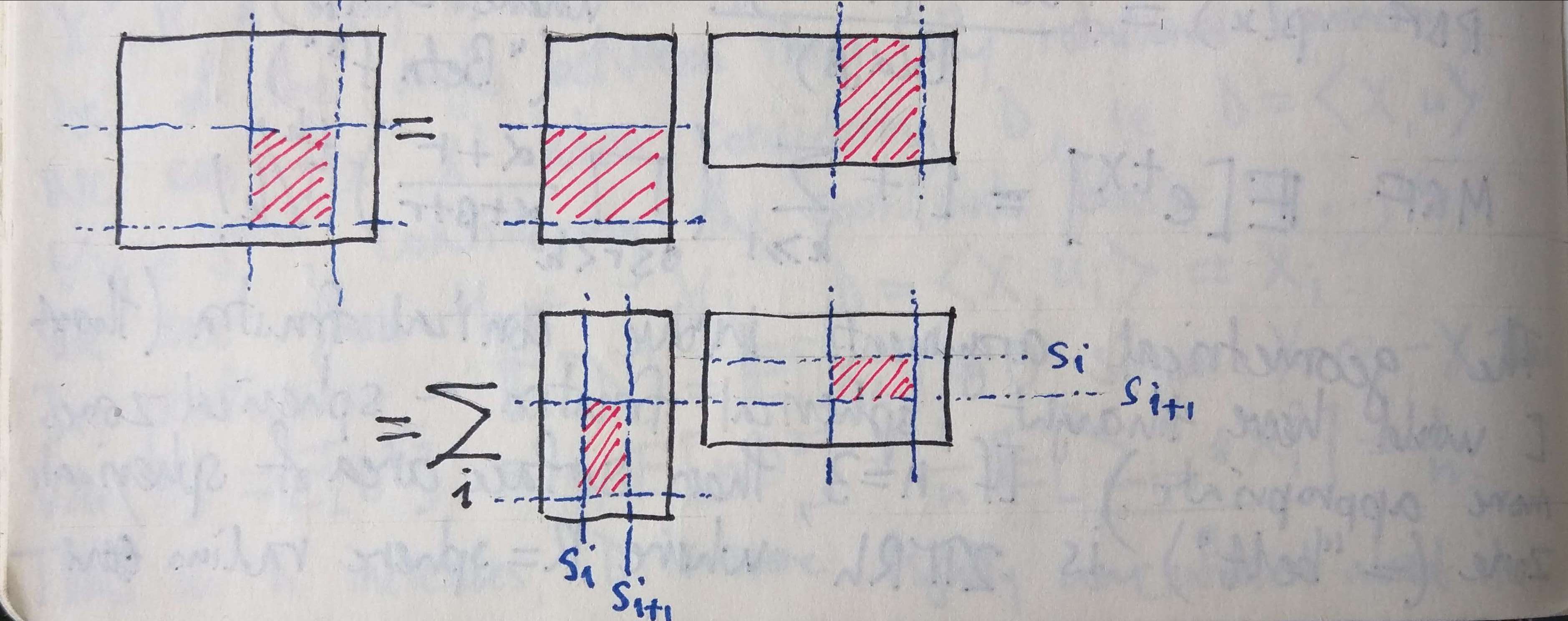

Pictorially, we have the following:

Arithmetically, this is easy to prove by considering the formula above for the components of the product. The partitioning of the outer dimensions comes for free, while the partitioning of the inner dimension just corresponds to partitioning the summation:
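As a sketch of that argument, assume $A$ is $m \times n$ and $B$ is $n \times p$, and cut the inner index at a hypothetical point $n_1$. The component sum $\sum_{k=1}^{n} a_{ik} b_{kj}$ then splits into $\sum_{k=1}^{n_1} a_{ik} b_{kj} + \sum_{k=n_1+1}^{n} a_{ik} b_{kj}$, and each piece is the corresponding entry of a product of blocks. The NumPy check below illustrates this for a 2×2 partition of each factor. The sizes, the cut points `m1`, `n1`, `p1`, and the block names are all assumptions made for the illustration, not taken from the original figures.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: A is m x n, B is n x p.
m, n, p = 5, 6, 4
A = rng.standard_normal((m, n))
B = rng.standard_normal((n, p))

# Hypothetical cut points: rows of A, the shared inner dimension, columns of B.
m1, n1, p1 = 2, 3, 1

# Partition A and B into 2 x 2 blocks. The inner cut n1 must be the same
# for the columns of A and the rows of B, so that the block products are defined.
A11, A12 = A[:m1, :n1], A[:m1, n1:]
A21, A22 = A[m1:, :n1], A[m1:, n1:]
B11, B12 = B[:n1, :p1], B[:n1, p1:]
B21, B22 = B[n1:, :p1], B[n1:, p1:]

# Multiply the blocks as if they were scalar entries of 2 x 2 matrices,
# then reassemble the result.
C_blocks = np.block([
    [A11 @ B11 + A12 @ B21, A11 @ B12 + A12 @ B22],
    [A21 @ B11 + A22 @ B21, A21 @ B12 + A22 @ B22],
])

# The blockwise product agrees with the ordinary product.
assert np.allclose(C_blocks, A @ B)
```

The outer cuts `m1` and `p1` only select which rows and columns of the result land in which block, which is the sense in which they "come for free". The inner cut `n1` is the one that turns a single sum into a sum of two block products.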

Zooming out to a categorical level, we can see that there is nothing peculiar about this situation. If, in an additive category, we have three objects

then this “block decomposition of matrices” finds expression as a formula in
